As we move deeper into the 21st century, technology has become woven into nearly every part of daily life. Robotics, artificial intelligence (AI), and business automation are evolving rapidly, promising greater efficiency and new capabilities. As we embrace these innovations, however, it’s essential to examine the risks they carry, especially in the learning domain.

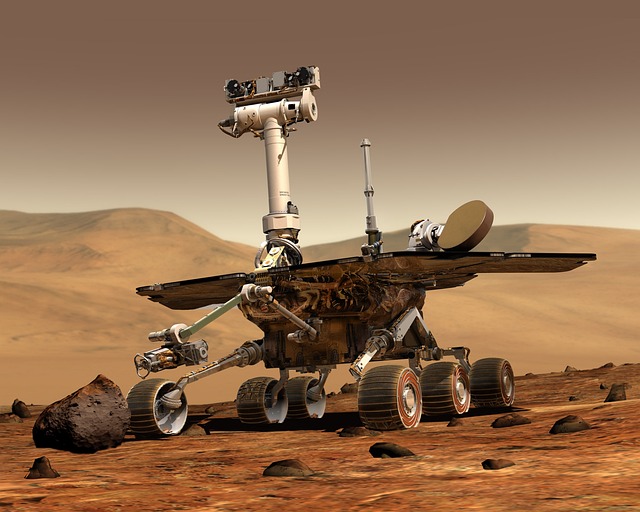

Robotics is reshaping industries from manufacturing to healthcare. Robots offer remarkable precision and can perform tasks beyond human capability, but they also carry significant risks. In educational settings, for instance, robotic tutors are beginning to provide tailored learning experiences for students. But what happens when we rely too heavily on these machines? Dependence on the technology may erode critical thinking skills, as students come to favor answers provided by robots over their own conclusions.

Artificial intelligence, now a crucial part of modern learning, raises risks of its own. AI systems are designed to analyze vast amounts of data and can surface insights that would take humans much longer to derive. However, they can also reinforce existing biases: an algorithm trained on skewed data may perpetuate those biases in its recommendations. In educational contexts, this could mean that students are exposed to a limited worldview, which in turn affects their comprehension and decision-making. The challenge lies in ensuring that AI systems are designed with fairness and inclusivity in mind.

Automating business processes adds another layer of risk. Automation promises to free people from repetitive tasks, but at what cost? The shift to automated systems can displace jobs, and the resulting workforce transition demands significant learning and adaptation. Educators in academic institutions must therefore prepare students for a future in which jobs evolve continually alongside technology. There’s an urgent need to build digital literacy and critical thinking into curricula, so that learners do not merely follow automated systems but can also innovate and adapt.

Moreover, the ethical implications of AI in learning cannot be overlooked. As businesses increasingly use AI for training and evaluation, we must ask: who is responsible for the outcomes these systems produce? If automated tools fail to assess a student’s true potential or, worse, misinterpret data and produce unfair evaluations, the consequences are serious. Such failures are among the most profound AI risks we face as we navigate this changing landscape.

As we explore the potential of robotics, artificial intelligence, and business automation in learning environments, it’s vital to approach these technologies with a critical eye. Embracing their benefits while acknowledging and mitigating the AI risks is key to a balanced educational ecosystem. By prioritizing ethical considerations, promoting diversity in AI training data, and equipping learners with the skills they need to thrive, we can harness the power of technology without losing sight of the human element that underpins education.