Reinforcement learning (RL) has grown from a theoretical idea with roots in the 1950s into a cornerstone of contemporary autonomous systems. By letting an agent learn effective behavior through trial and error, RL reduces the need for exhaustive programming or handcrafted control logic. In robotics, this translates to machines that can adapt to unpredictable environments, while in business automation, RL provides dynamic decision engines that continuously refine workflows and resource allocation. The convergence of RL with advanced sensing, high-performance computing, and cloud infrastructure is now accelerating the deployment of intelligent robots on factory floors, in warehouses, and even in customer-facing roles. This article explores how reinforcement learning is reshaping robotics and business processes, highlighting real-world applications, technical nuances, and the broader implications for industry stakeholders.

The Foundations of Reinforcement Learning in Autonomous Robotics

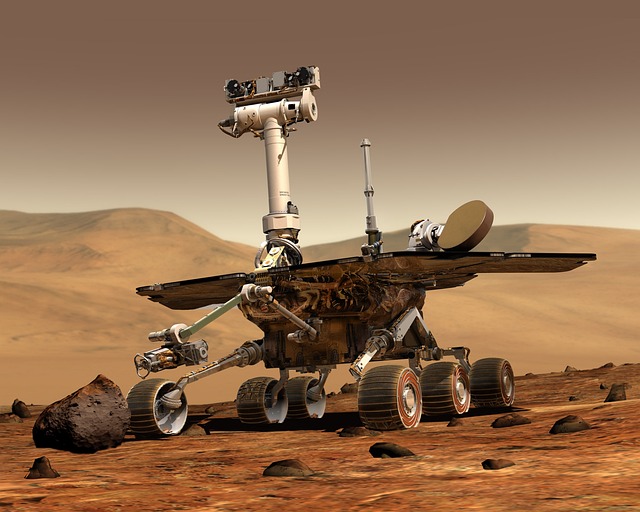

At its core, reinforcement learning describes an agent interacting with an environment: at each step the agent receives an observation, takes an action, and receives a reward. Over repeated episodes, it seeks to maximize its expected cumulative (usually discounted) reward. In robotic contexts, the environment is often the physical world, the observations are sensor readings from LiDAR, cameras, or joint encoders, and the actions are motor commands. The reward function is designed to reflect task success, safety, efficiency, or any combination of performance metrics.
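To make the observe-act-reward loop concrete, here is a minimal sketch using tabular Q-learning, one classic RL algorithm chosen for brevity rather than one the article prescribes. The six-state corridor environment, the `step`, `greedy`, and `train` helpers, and all hyperparameters are hypothetical illustrations: the agent starts at state 0 and earns a reward of 1 only when it reaches the last state.

```python
import random

# Hypothetical toy environment: a 1-D corridor of states 0..N_STATES-1.
# The agent starts at state 0; reaching the last state yields reward 1 and ends the episode.
N_STATES = 6
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Environment transition: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore occasionally, otherwise exploit current estimates.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Q-learning target: immediate reward plus discounted best next-state value.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


q = train()
policy = [greedy(q, s, random.Random(1)) for s in range(N_STATES - 1)]
print(policy)  # → [1, 1, 1, 1, 1] (always move right)
```

The discount factor `gamma` is what turns "cumulative reward" into a preference for reaching the goal sooner: each extra step multiplies the eventual reward by 0.9.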

Early RL work focused on discrete action spaces and simple grid worlds. Modern robotics, however, requires continuous control, high-dimensional perception, and real-time constraints. To meet these demands, researchers have combined RL with deep neural networks, giving rise to deep reinforcement learning (DRL). Deep Q-Networks (DQN) handle discrete action sets, while policy-gradient and actor-critic methods such as Proximal Policy Optimization (PPO) and Soft Actor-Critic (SAC) extend DRL to continuous motor commands. These techniques have been adapted for robotic hardware, enabling learning from raw images or proprioceptive signals.
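For continuous control, SAC-style policies typically output the mean and log standard deviation of a Gaussian, sample from it, and squash the result with tanh so the command respects actuator limits. The sketch below shows only that sampling step, assuming a hypothetical `sample_action` helper and made-up joint limits; in a real agent the mean and log standard deviation come from a neural network rather than being passed in by hand.

```python
import math
import random


def sample_action(mean, log_std, low, high, rng):
    """Sample one bounded continuous action (one value per actuator).

    Each dimension draws u ~ N(mean, exp(log_std)^2), squashes it with tanh
    into [-1, 1], and rescales it linearly to the actuator range [low, high].
    """
    action = []
    for mu, ls, lo, hi in zip(mean, log_std, low, high):
        u = rng.gauss(mu, math.exp(ls))  # unbounded Gaussian sample
        squashed = math.tanh(u)          # bounded to [-1, 1]
        action.append(lo + (squashed + 1.0) * 0.5 * (hi - lo))
    return action


# Usage with made-up values for a two-joint arm (joint velocity limits in rad/s).
rng = random.Random(0)
print(sample_action(mean=[0.0, 0.5], log_std=[-1.0, -0.5], low=[-1.0, -2.0], high=[1.0, 2.0], rng=rng))
```

Squashing keeps every sampled command inside the hardware limits, so the learning algorithm never has to clip actions after the fact.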

- Discrete vs. continuous action spaces: RL algorithms now routinely handle continuous motor outputs.

- Sim-to-real transfer: Simulators like MuJoCo or Gazebo provide inexpensive training environments, and domain randomization helps narrow the reality gap when policies move to physical hardware.

- Sample efficiency: Model-based RL and hybrid approaches reduce the number of real-world trials needed.
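Domain randomization usually means resampling simulator parameters at the start of every training episode, so the policy cannot overfit to one exact physics configuration. A minimal sketch, assuming a hypothetical `PhysicsParams` record and illustrative parameter ranges; passing the sampled values into MuJoCo, Gazebo, or another simulator is omitted because each has its own API.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    """Hypothetical per-episode simulator settings."""
    friction: float
    object_mass_kg: float
    sensor_noise_std: float
    control_latency_ms: float


# Illustrative ranges only; real ranges should bracket the uncertainty of the
# physical robot and its environment.
RANGES = {
    "friction": (0.5, 1.2),
    "object_mass_kg": (0.05, 0.30),
    "sensor_noise_std": (0.0, 0.02),
    "control_latency_ms": (5.0, 40.0),
}


def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw a fresh, independent value for every randomized parameter."""
    return PhysicsParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


rng = random.Random(42)
for episode in range(3):
    params = sample_params(rng)
    # A real training loop would reset the simulator with `params` here.
    print(episode, params)
```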

Case Study: Autonomous Manipulation in Manufacturing

Consider a robot tasked with assembling electronic components on a crowded circuit board. Traditional programming would require detailed geometric models and exhaustive motion plans. Instead, a reinforcement learning agent can learn to pick, place, and verify components by maximizing a reward that balances speed, accuracy, and collision avoidance. Over thousands of simulated episodes, the agent discovers a policy that is both robust to variations in component placement and resilient to sensor noise.
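A reward that balances speed, accuracy, and collision avoidance is often written as a weighted sum of per-step terms. The sketch below is one hypothetical way to express that idea: the `StepOutcome` fields, the weights, and the 0.5 mm placement tolerance are illustrative assumptions, not values from the case study.

```python
from dataclasses import dataclass


@dataclass
class StepOutcome:
    """Hypothetical summary of what happened during one control step."""
    elapsed_s: float           # time spent on this step
    placement_error_mm: float  # distance from the target pad
    placed: bool               # component seated and verified this step
    collision: bool            # gripper or part touched something it should not


# Illustrative weights; choosing them is itself a design decision.
W_TIME = 0.1
W_ERROR = 0.05
PLACE_BONUS = 1.0
COLLISION_PENALTY = 5.0
TOLERANCE_MM = 0.5


def reward(o: StepOutcome) -> float:
    r = -W_TIME * o.elapsed_s             # speed: every second costs something
    r -= W_ERROR * o.placement_error_mm   # accuracy: penalize misalignment
    if o.placed and o.placement_error_mm <= TOLERANCE_MM:
        r += PLACE_BONUS                  # task success within tolerance
    if o.collision:
        r -= COLLISION_PENALTY            # safety: collisions dominate other terms
    return r


print(reward(StepOutcome(elapsed_s=1.0, placement_error_mm=0.2, placed=True, collision=False)))
```

Because the collision penalty outweighs the other terms, the agent learns to treat a collision as far costlier than a slower or slightly less precise placement; mis-weighting such terms is a common source of unintended behavior.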

“By using reinforcement learning, we reduced the assembly time by 35% while maintaining a defect rate below 0.2%.” – Lead Automation Engineer, CircuitTech

Key enablers include high-fidelity physics engines, reinforcement learning libraries (e.g., OpenAI Baselines, RLlib), and hardware-in-the-loop testing to refine policies before full deployment.

Business Automation Powered by Reinforcement Learning

In corporate environments, tasks such as inventory management, dynamic pricing, and customer routing are often governed by rule-based systems. These systems can struggle with shifting demand patterns, supply disruptions, or regulatory changes. Reinforcement learning offers a data-driven alternative: agents learn to make decisions that optimize long-term business objectives, accounting for uncertainty and delayed rewards.

For instance, a warehouse management system can use RL to allocate storage locations, plan pick routes, and schedule replenishment. Rewards may include reduced travel time, balanced aisle load, and compliance with temperature controls. Over time, the RL agent can adapt to seasonal spikes, equipment failures, or new product introductions, sustaining high throughput with less manual re-tuning.

- Dynamic Pricing: Reinforcement learning can continuously adjust prices based on real-time demand, competitor actions, and inventory levels, maximizing revenue while maintaining market share.

- Customer Support Routing: RL agents decide which support tickets to assign to which agents, balancing resolution time, customer satisfaction, and agent expertise.

- Supply Chain Optimization: Policies learned through RL can handle order batching, route planning, and cross-docking decisions in the presence of fluctuating lead times and transport costs.
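Dynamic pricing is often prototyped as a multi-armed bandit, a simplified RL setting without state transitions. The epsilon-greedy sketch below chooses among a few price points and tracks the average revenue observed at each. The price list and the linear demand curve in `simulated_revenue` are hypothetical stand-ins for real market feedback, and the sketch deliberately ignores inventory levels, competitor moves, and long-term effects that a full RL formulation would model.

```python
import random

PRICES = [5.0, 10.0, 15.0]  # hypothetical candidate price points


def simulated_revenue(price, rng):
    """Hypothetical demand curve: purchase probability falls linearly with price."""
    buy_prob = max(0.0, 1.0 - price / 20.0)
    return price if rng.random() < buy_prob else 0.0


def run_pricing(rounds=20000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    counts = [0] * len(PRICES)
    means = [0.0] * len(PRICES)  # running average revenue per price
    for _ in range(rounds):
        if rng.random() < epsilon:
            i = rng.randrange(len(PRICES))                      # explore
        else:
            i = max(range(len(PRICES)), key=lambda j: means[j])  # exploit
        r = simulated_revenue(PRICES[i], rng)
        counts[i] += 1
        means[i] += (r - means[i]) / counts[i]  # incremental mean update
    return means, counts


means, counts = run_pricing()
print(means, counts)
```

Under this made-up demand curve the expected revenue per offer is 3.75, 5.0, and 3.75 for the three prices, so most rounds should end up at the middle price while the occasional exploration keeps checking the alternatives.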

Integrating RL with Existing Enterprise Systems

Adoption requires careful alignment with legacy infrastructure. Reinforcement learning models can be encapsulated as microservices, exposing APIs that accept state inputs (e.g., current inventory levels) and return action suggestions (e.g., reorder quantities). Data pipelines collect state, action, reward, and next-state records for continual learning, keeping the agent up to date with market dynamics.
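The microservice pattern can be sketched without committing to a web framework: a handler that turns a JSON state into a JSON action, plus a log of transitions for later retraining. Everything here is hypothetical: the JSON schema, the `make_handler` and `TransitionLog` names, and `placeholder_policy`, a simple order-up-to rule standing in for a trained model. A web framework or Python's `http.server` would call `handle` with the request body.

```python
import json
from typing import Callable, Dict, List

State = Dict[str, float]
Action = Dict[str, float]


def make_handler(policy: Callable[[State], Action]) -> Callable[[str], str]:
    """Wrap a policy as a JSON-in / JSON-out request handler."""
    def handle(request_body: str) -> str:
        state = json.loads(request_body)["state"]
        return json.dumps({"action": policy(state)})
    return handle


class TransitionLog:
    """Collects (state, action, reward, next_state) records for continual learning."""

    def __init__(self) -> None:
        self.records: List[dict] = []

    def append(self, state: State, action: Action, reward: float, next_state: State) -> None:
        self.records.append({"state": state, "action": action,
                             "reward": reward, "next_state": next_state})


def placeholder_policy(state: State) -> Action:
    """Hypothetical stand-in for a trained model: order up to a fixed target."""
    target = 100.0
    return {"reorder_qty": max(0.0, target - state["inventory"])}


handle = make_handler(placeholder_policy)
print(handle('{"state": {"inventory": 40}}'))  # {"action": {"reorder_qty": 60.0}}
```

Swapping `placeholder_policy` for a trained model leaves the API contract unchanged, which is what lets the RL component slot into existing ERP or warehouse systems.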

“The reinforcement learning engine we integrated with our ERP system has slashed order cycle times by 20%.” – Chief Data Officer, RetailCo

Key considerations include data privacy, interpretability, and regulatory compliance. Transparent reward definitions and periodic policy audits help stakeholders trust the system and satisfy compliance requirements.

Challenges and Mitigations in RL Deployment

While reinforcement learning promises significant gains, several hurdles persist:

- Reward Design: Crafting a reward function that captures all aspects of desired behavior is non-trivial. Poorly defined rewards can lead to unintended behaviors.

- Sample Inefficiency: Real-world training can be costly. Techniques like offline RL, where policies learn from pre-collected data, help reduce live interaction.

- Safety and Reliability: Especially in robotics, unsafe exploratory actions can damage equipment or cause accidents. Safe RL methods, such as constrained policy optimization, optimize performance subject to explicit safety constraints during training.

- Computational Resources: Training deep RL models demands GPUs or specialized hardware. Cloud platforms lower this barrier for training, while lightweight models deployed for edge inference keep runtime costs manageable.

Mitigation strategies involve simulation-first development, incremental deployment, and hybrid systems that combine RL with rule-based safeguards.
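A rule-based safeguard is often implemented as a shield that checks each action the RL policy proposes before it reaches the hardware. The sketch below assumes a hypothetical mobile robot commanded by a single velocity, with made-up speed and stopping-distance limits; the `shield` function returns the safe command plus a flag so that overrides can be logged and audited.

```python
from typing import Tuple


def shield(proposed_velocity: float,
           max_speed: float,
           distance_to_obstacle_m: float,
           stop_distance_m: float) -> Tuple[float, bool]:
    """Filter an RL-proposed velocity through fixed safety rules.

    Returns (safe_velocity, overridden).
    """
    if distance_to_obstacle_m < stop_distance_m:
        safe = 0.0  # rule 1: stop if an obstacle is too close
    else:
        safe = max(-max_speed, min(proposed_velocity, max_speed))  # rule 2: clamp to speed limit
    return safe, safe != proposed_velocity


print(shield(2.0, max_speed=1.0, distance_to_obstacle_m=5.0, stop_distance_m=0.5))  # (1.0, True)
print(shield(0.3, max_speed=1.0, distance_to_obstacle_m=5.0, stop_distance_m=0.5))  # (0.3, False)
print(shield(0.3, max_speed=1.0, distance_to_obstacle_m=0.2, stop_distance_m=0.5))  # (0.0, True)
```

Keeping the rules outside the learned policy means they hold regardless of what the policy has learned, which is the point of a hybrid design.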

Future Directions: Multi-Agent RL and Edge Computing

Modern factories and businesses feature fleets of autonomous agents—robots, drones, and software bots—working in coordination. Multi-agent reinforcement learning (MARL) extends RL to scenarios where multiple agents learn jointly, fostering cooperation or competition. Applications include coordinated warehouse fleets, synchronized manufacturing lines, and collaborative customer service bots.

Edge computing is another transformative trend. By deploying lightweight RL models on edge devices, systems can respond to sensor inputs within milliseconds, which is critical for high-speed assembly or real-time fraud detection. Local inference also reduces bandwidth use and dependence on network connectivity, enabling robust operation in disconnected environments.

Economic Impact and Workforce Implications

Reinforcement learning-driven automation brings measurable economic benefits. According to a recent industry report, companies that deployed RL-based autonomous robotics reported average productivity increases of 25–40% within the first year. Moreover, cost savings stem from reduced labor hours, lower error rates, and optimized resource utilization.

Workforce dynamics also shift. While routine tasks become automated, new roles emerge: RL engineers, data scientists, and policy auditors. Organizations that invest in upskilling employees to manage, interpret, and refine RL systems can maintain a competitive advantage and ensure a smooth transition.

“Our team shifted from manual process design to focusing on reinforcement learning strategy, and we now lead the industry in autonomous throughput.” – Head of Robotics, AutoDynamics

Ethical and Regulatory Considerations

As autonomous systems take on more decision-making authority, ethical accountability becomes paramount. Reinforcement learning models must align with societal values and legal frameworks. Transparent reward structures, explainable policy reasoning, and continuous monitoring are essential practices. Regulatory bodies are beginning to draft guidelines that address algorithmic fairness, data privacy, and safety certification for RL-enabled systems.

Conclusion: A Paradigm Shift in Automation

Reinforcement learning has matured from a niche research area into a practical engine for autonomous robotics and business automation. Its capacity to learn directly from interaction, optimize for long-term objectives, and adapt to evolving environments positions it as a catalyst for innovation across industries. While challenges around safety, interpretability, and deployment remain, the trajectory is clear: RL will increasingly underpin systems that were once the domain of human expertise.

For businesses contemplating the next step toward automation, the message is unequivocal: investing in reinforcement learning capabilities is not merely a technology upgrade but a strategic imperative to stay ahead in a rapidly digitalizing world.